A few weeks ago I was looking through the job boards and found a contract role that sparked my interest. A company was looking for a .NET contractor to develop a prototype system to transcribe videos. It was a two-week contract, which seemed a bit short for what looked like quite a niche project. Video transcription wasn't something that I'd ever tried, so I thought I'd do a bit of research. To my surprise, I found that it was quite achievable in that time frame. Within a couple of hours I'd managed to create an app that would transcribe the speech from videos and output it to the console.

My first attempt didn't go very well. I'd googled the problem and found lots of examples that used the built-in Windows speech recogniser. I couldn't get it to recognise even the clearest of speech; I just ended up with a string of seemingly random words at the end of each test. The other disadvantage of this method is that it ties the application to the Windows platform. I'm a fan of running applications in containers. With Windows I need more processing power, more memory and more storage space than with my go-to Linux distribution, Alpine. I also need to factor in the licensing cost of Windows.

After some more research I chose to try AI Speech from the Azure AI services. This is a paid service but has a free tier with some limitations.

Azure AI Speech Service

The Azure AI Speech Service has many uses including:

- Speech to text conversion

- Text to speech conversion

- Speech translation (to speech or text in a different language)

- Intent recognition (e.g. does a user want to order a pizza or check the weather)

- Keyword recognition (e.g. Hey Google)

Here we're going to concentrate on speech to text conversion, which can be performed by several methods:

- Real-time

- Fast (this is a preview feature and is only available by REST API)

- Batch

Real-time

This is the method that we'll be using, as it's quick and easy to develop a prototype with. It may not be the best method for our use case, though, as we may want to process files in batches and have them transcribed faster than real time.

Fast

This transcribes much faster than real-time but is currently only available as a preview REST API. If this had reached general availability then it would probably be the best choice.

Batch

This transcribes batches of files from Azure Blob Storage. At peak times it can take over 30 minutes for the batch job to start. This may suit the project, depending on their use-case, but we want to demonstrate this quickly so it's not for us.

Azure AI Speech

Azure AI Speech Pricing

The Implementation

The first thing that we'll need is a Speech Service in Azure AI Services. If you haven't already set one up then I suggest that you take a look at the Free (F0) pricing tier. The basic configuration should be fine; you can leave the Network, Identity and Tags sections alone unless you're looking for a more complex setup.

Once you have your Speech Service, make a note of the region and one of the keys, both of which are available from the overview tab.

Now let's fire up Visual Studio and create a new C# console app.

Configuration

The first thing that we'll do is create a class to hold the configuration settings. We'll call it AzureSpeechServiceConfig.

public class AzureSpeechServiceConfig
{
    public string Key { get; set; }
    public string Region { get; set; }
}

We'll be storing these as User Secrets, so right-click your project, select 'Manage User Secrets' and enter the values. You should be prompted to install the Microsoft.Extensions.Configuration.UserSecrets NuGet package; if not, install it manually. You'll also need to install the Microsoft.Extensions.Configuration.Binder package so that the configuration can be bound to the config class.

{
    "AzureSpeechService": {
        "Key": "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx",
        "Region": "uksouth"
    }
}

In our Program.cs file we'll then need to map the config to our AzureSpeechServiceConfig class.

using Microsoft.Extensions.Configuration;
using VideoSpeechToText.Console; //namespace of your console app

IConfigurationRoot config = new ConfigurationBuilder()
    .AddUserSecrets<Program>()
    .Build();

var azureSpeechServiceConfig = config
    .GetSection("AzureSpeechService")
    .Get<AzureSpeechServiceConfig>();
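
One thing to watch for is that Get<T>() returns null when the section is missing, for example if the user secrets were never entered. A minimal sketch of a guard is shown below. The AzureSpeechServiceConfigGuard helper is hypothetical (it isn't part of any library), and the config class is repeated, with nullable properties, only so that the block compiles on its own.

```csharp
using System;

public class AzureSpeechServiceConfig
{
    public string? Key { get; set; }
    public string? Region { get; set; }
}

// Hypothetical helper: fails fast with a readable message instead of
// letting a null or empty setting surface later inside the Speech SDK.
public static class AzureSpeechServiceConfigGuard
{
    public static AzureSpeechServiceConfig EnsureValid(AzureSpeechServiceConfig? config)
    {
        if (config is null)
        {
            throw new InvalidOperationException(
                "The 'AzureSpeechService' configuration section is missing.");
        }

        if (string.IsNullOrWhiteSpace(config.Key))
        {
            throw new InvalidOperationException("AzureSpeechService:Key is not set.");
        }

        if (string.IsNullOrWhiteSpace(config.Region))
        {
            throw new InvalidOperationException("AzureSpeechService:Region is not set.");
        }

        return config;
    }
}
```

In Program.cs the bound value could then be passed through AzureSpeechServiceConfigGuard.EnsureValid(...) before it is handed to anything else.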

Speech to Text Conversion

This is the main part of the process. We'll be converting the audio from files to text. Azure Speech Services expects real-time audio to come either from a microphone or a .wav file. We'll be using the .wav file option. Video files can be dealt with at a later stage.

I like to think ahead to the future. We're using Azure today but we could be using AWS or Google Cloud tomorrow. Let's create an interface for a Speech Transcriber that ensures, regardless of implementation, that we have the minimum required to process the file and return the result.

public interface ISpeechTranscriber
{
    Task<string> TranscribeAsync(
        string filePath,
        string fileName,
        CancellationToken cancellationToken = default
    );
}
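
A side benefit of the interface is that the rest of the app can be exercised without calling Azure at all. As a rough sketch, a stand-in implementation that returns canned text might look like the following. FakeSpeechTranscriber is a hypothetical name used purely for illustration, and the interface is repeated so that the block compiles on its own.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public interface ISpeechTranscriber
{
    Task<string> TranscribeAsync(
        string filePath,
        string fileName,
        CancellationToken cancellationToken = default
    );
}

// Hypothetical stand-in: ignores the file and returns a fixed transcript.
public sealed class FakeSpeechTranscriber : ISpeechTranscriber
{
    private readonly string _cannedText;

    public FakeSpeechTranscriber(string cannedText)
    {
        _cannedText = cannedText;
    }

    public Task<string> TranscribeAsync(
        string filePath,
        string fileName,
        CancellationToken cancellationToken = default
    )
    {
        // honour cancellation in the same way a real implementation should
        cancellationToken.ThrowIfCancellationRequested();
        return Task.FromResult(_cannedText);
    }
}
```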

Now we can look at the implementation. Install the Microsoft.CognitiveServices.Speech package which serves as a wrapper to the API. We can then create an AzureSpeechTranscriber class that implements ISpeechTranscriber, taking our AzureSpeechServiceConfig and mapping it to a Microsoft.CognitiveServices.Speech.SpeechConfig object.

using Microsoft.CognitiveServices.Speech;

public class AzureSpeechTranscriber : ISpeechTranscriber
{
    private readonly SpeechConfig _speechConfig;

    public AzureSpeechTranscriber(
        AzureSpeechServiceConfig config
    )
    {
        _speechConfig = SpeechConfig.FromSubscription(
            config.Key,
            config.Region
        );
    }

    public async Task<string> TranscribeAsync(
        string filePath,
        string fileName,
        CancellationToken cancellationToken = default
    )
    {
        throw new NotImplementedException();
    }
}

In order to use the Speech Recogniser in Azure Speech Services we need an AudioConfig object. This will tell the Speech Recogniser where the input is coming from. In our case it's a .wav file, but it could be a microphone or a stream. We'll create a private function that takes the AudioConfig object and call it from our public method after setting input to the .wav file.

using Microsoft.CognitiveServices.Speech;
using Microsoft.CognitiveServices.Speech.Audio;

public class AzureSpeechTranscriber : ISpeechTranscriber
{
    private readonly SpeechConfig _speechConfig;

    public AzureSpeechTranscriber(
        AzureSpeechServiceConfig config
    )
    {
        _speechConfig = SpeechConfig.FromSubscription(
            config.Key,
            config.Region
        );
    }

    public async Task<string> TranscribeAsync(
        string filePath,
        string fileName,
        CancellationToken cancellationToken = default
    )
    {
        string fullFilePath = Path.Combine(filePath, fileName);

        // tell the recogniser to read its input from the .wav file
        AudioConfig audioConfig = AudioConfig.FromWavFileInput(fullFilePath);

        return await RecogniseSpeechAsync(audioConfig);
    }

    private async Task<string> RecogniseSpeechAsync(
        AudioConfig audioConfig
    )
    {
        throw new NotImplementedException();
    }
}

Looking at the documentation, we should simply be able to call the SpeechRecognizer.RecognizeOnceAsync() method.

private async Task<string> RecogniseSpeechAsync(
    AudioConfig audioConfig
)
{
    using SpeechRecognizer speechRecognizer = new(_speechConfig, audioConfig);

    SpeechRecognitionResult result = await speechRecognizer.RecognizeOnceAsync();

    return result.Text;
}

However, this won't recognise the whole file. Later in the documentation it states that 'single-shot recognition' only 'recognizes a single utterance' and that 'The end of a single utterance is determined by listening for silence at the end or until a maximum of 15 seconds of audio is processed'. This means that at most we'll get 15 seconds transcribed, and if there is a pause in the speech it could be less.

In order to transcribe the whole file we need to use 'continuous recognition'. This involves subscribing to events. These events are:

- Recognizing: Signal for events that contain intermediate recognition results.

- Recognized: Signal for events that contain final recognition results, which indicate a successful recognition attempt.

- SessionStopped: Signal for events that indicate the end of a recognition session (operation).

- Canceled: Signal for events that contain canceled recognition results. These results indicate a recognition attempt that was canceled as a result of a direct cancelation request. Alternatively, they indicate a transport or protocol failure.

The main event that we're interested in is Recognized, as we need to use it to append each new piece of text to our result. We'll also want to subscribe to SessionStopped and Canceled so that we can tell when transcription has finished or if an error has occurred.

Our modified function looks like this.

private async Task<string> RecogniseSpeechAsync(
    AudioConfig audioConfig
)
{
    using SpeechRecognizer speechRecognizer = new(_speechConfig, audioConfig);

    // Completed by the Canceled or SessionStopped handlers below.
    var stopRecognition = new TaskCompletionSource<int>(
        TaskCreationOptions.RunContinuationsAsynchronously);

    string text = string.Empty;

    speechRecognizer.Recognizing += (s, e) =>
    {
        // intermediate results only; no need to do anything here
    };

    speechRecognizer.Recognized += (s, e) =>
    {
        if (e.Result.Reason == ResultReason.RecognizedSpeech)
        {
            // append each finalised phrase to the transcript
            text = text + e.Result.Text + " ";
        }
        else if (e.Result.Reason == ResultReason.NoMatch)
        {
            // ignore it
        }
    };

    speechRecognizer.Canceled += (s, e) =>
    {
        stopRecognition.TrySetResult(0);
    };

    speechRecognizer.SessionStopped += (s, e) =>
    {
        stopRecognition.TrySetResult(0);
    };

    await speechRecognizer.StartContinuousRecognitionAsync();

    // Wait for completion without blocking the calling thread.
    await stopRecognition.Task;

    await speechRecognizer.StopContinuousRecognitionAsync();

    return text.Trim();
}
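
The function above treats Canceled the same as SessionStopped, so an error would just give us a shorter transcript. To show the event-to-task pattern in isolation, here is a minimal sketch that also turns a cancellation into a failed task. FakeRecognizer and EventBridge are hypothetical stand-ins written for this example; they are not Speech SDK types, and the fake raises its events synchronously, whereas a real recogniser raises them from background threads.

```csharp
using System;
using System.Text;
using System.Threading.Tasks;

// Hypothetical stand-in for an event-based recogniser; not the Speech SDK.
public sealed class FakeRecognizer
{
    public event EventHandler<string>? Recognized;   // argument is the recognised phrase
    public event EventHandler<string>? Canceled;     // argument is an error message
    public event EventHandler? SessionStopped;

    public void Emit(string phrase) => Recognized?.Invoke(this, phrase);
    public void Fail(string error) => Canceled?.Invoke(this, error);
    public void Stop() => SessionStopped?.Invoke(this, EventArgs.Empty);
}

public static class EventBridge
{
    // Returns a task that completes with the joined text once the session
    // stops, or faults if the recogniser reports a cancellation.
    public static Task<string> CollectAsync(FakeRecognizer recognizer)
    {
        var done = new TaskCompletionSource<string>(
            TaskCreationOptions.RunContinuationsAsynchronously);
        var text = new StringBuilder();

        recognizer.Recognized += (s, phrase) => text.Append(phrase).Append(' ');
        recognizer.Canceled += (s, error) =>
            done.TrySetException(new InvalidOperationException(error));
        recognizer.SessionStopped += (s, e) =>
            done.TrySetResult(text.ToString().Trim());

        return done.Task;
    }
}
```

Because TrySetException and TrySetResult only succeed once, whichever event arrives first decides the outcome and later events are ignored.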

We can now call our AzureSpeechTranscriber from Program.cs and see if it works. Test files for speech recognition can be found on Kaggle.

using Microsoft.Extensions.Configuration;

using VideoSpeechToText.Console;

IConfigurationRoot config = new ConfigurationBuilder()

.AddUserSecrets<Program>()

.Build();

var azureSpeechServiceConfig = config

.GetSection("AzureSpeechService")

.Get<AzureSpeechServiceConfig>();

ISpeechTranscriber mySpeechRecognizer = new AzureSpeechTranscriber(azureSpeechServiceConfig);

string text = await mySpeechRecognizer.TranscribeAsync("C:\\Test", "harvard.wav"); // 18s clear speech

Console.WriteLine(text);

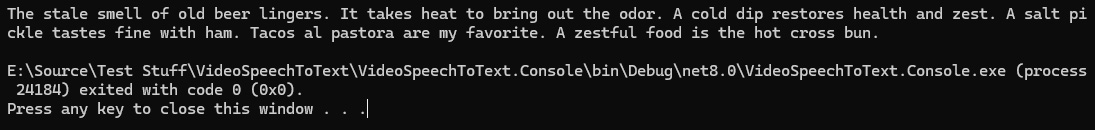

The result:

Great! It works and it's pretty accurate.

Great! It works and it's pretty accurate.

Dealing with video files

We've currently only solved part of the problem. We now need the ability to transcribe a video file. Let's extract the audio from a video and save it out as a .wav file. We can then read this file instead of the original one. I tried to bypass this step and use streams instead, but I found that this didn't work. In fact, I couldn't even get the Speech Recogniser to work with a .wav file loaded into a stream, although I didn't spend much time on this.

We'll create another interface, for a Video to Wav Converter. This will allow us to easily switch libraries should one be discontinued or we find performance issues with it etc.

public interface IVideoToWavConverter
{
    Task ConvertToWavFileAsync(
        string inputFilePath,
        string outputFilePath,
        CancellationToken cancellationToken = default
    );
}

The NAudio package provides some great utilities for converting audio and video. We'll use that to perform the conversion. Here we simply read the original file, perform the conversion in memory and then write the new file. Note that MediaFoundationReader relies on Windows Media Foundation, so this particular converter only runs on Windows.

using NAudio.Wave;

public class VideoToWavConverter : IVideoToWavConverter
{
    public async Task ConvertToWavFileAsync(
        string inputFilePath,
        string outputFilePath,
        CancellationToken cancellationToken = default
    )
    {
        // decode the audio track of the source file
        using (var video = new MediaFoundationReader(inputFilePath))
        {
            using (var memoryStream = new MemoryStream())
            {
                // write the decoded audio to memory in .wav format
                WaveFileWriter.WriteWavFileToStream(memoryStream, video);
                byte[] bytes = memoryStream.ToArray();
                await File.WriteAllBytesAsync(outputFilePath, bytes, cancellationToken);
            }
        }
    }
}
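
Holding the whole .wav file in memory is fine for short clips, but it could become a problem for long videos. As a rough sketch of an alternative, NAudio can write straight to disk and resample at the same time. The 16 kHz, 16-bit, mono target below is an assumption based on the default WAV input format described in the Speech SDK documentation, and the WavExport class name is hypothetical. Like the converter above, this relies on Media Foundation, so it is Windows-only.

```csharp
using NAudio.Wave;

// Hypothetical helper, shown as an alternative to the in-memory conversion.
public static class WavExport
{
    // Assumption: 16 kHz, 16-bit, mono PCM suits the Speech Service.
    private static readonly WaveFormat SpeechFormat = new WaveFormat(16000, 16, 1);

    public static void ConvertToSpeechWav(string inputFilePath, string outputFilePath)
    {
        // decode the audio track of the source file
        using var reader = new MediaFoundationReader(inputFilePath);

        // convert sample rate, bit depth and channel count to the target format
        using var resampler = new MediaFoundationResampler(reader, SpeechFormat);

        // stream the converted audio to disk rather than buffering it all
        WaveFileWriter.CreateWaveFile(outputFilePath, resampler);
    }
}
```

I haven't measured whether this changes the transcription accuracy, so treat it as something to experiment with rather than a drop-in replacement.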

Now we need to decide where to call the converter. We could do this in Program.cs or in AzureSpeechTranscriber. I'm going to opt for doing this in AzureSpeechTranscriber for the simple reason that if we changed the ISpeechTranscriber to a different implementation then it may become redundant. AWS or Google Cloud may work fine transcribing .mp4 files so we'll tie it to our Azure implementation.

using Microsoft.CognitiveServices.Speech;
using Microsoft.CognitiveServices.Speech.Audio;

public class AzureSpeechTranscriber : ISpeechTranscriber
{
    private readonly SpeechConfig _speechConfig;
    private readonly IVideoToWavConverter _videoToWavConverter;

    public AzureSpeechTranscriber(
        AzureSpeechServiceConfig config,
        IVideoToWavConverter videoToWavConverter
    )
    {
        _speechConfig = SpeechConfig.FromSubscription(
            config.Key,
            config.Region
        );
        _videoToWavConverter = videoToWavConverter;
    }

    public async Task<string> TranscribeAsync(
        string filePath,
        string fileName,
        CancellationToken cancellationToken = default
    )
    {
        string fullFilePath = Path.Combine(filePath, fileName);

        // anything that isn't already a .wav file gets converted first
        bool convertToWavFile = !fullFilePath.EndsWith(".wav", StringComparison.InvariantCultureIgnoreCase);

        if (convertToWavFile)
        {
            string newFilePath = Path.Combine(filePath, $"{Guid.NewGuid()}.wav");
            await _videoToWavConverter.ConvertToWavFileAsync(fullFilePath, newFilePath, cancellationToken);
            fullFilePath = newFilePath;
        }

        AudioConfig audioConfig = AudioConfig.FromWavFileInput(fullFilePath);

        return await RecogniseSpeechAsync(audioConfig);
    }

    private async Task<string> RecogniseSpeechAsync(
        AudioConfig audioConfig
    )
    {
        ... // omitted for brevity
    }
}
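
One loose end is that the converted .wav file is left on disk after transcription. A minimal sketch of one way to tidy up is a small helper that hands out a temporary path and deletes the file afterwards. TempFile is a hypothetical helper, not part of the project, and it assumes that whatever reads the file has released it by the time the callback returns.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

// Hypothetical helper: provides a unique temporary .wav path to the
// callback and deletes the file afterwards, even if the callback throws.
public static class TempFile
{
    public static async Task<T> UseAsync<T>(
        string directory,
        Func<string, Task<T>> work
    )
    {
        string path = Path.Combine(directory, $"{Guid.NewGuid()}.wav");

        try
        {
            return await work(path);
        }
        finally
        {
            if (File.Exists(path))
            {
                File.Delete(path);
            }
        }
    }
}
```

Inside TranscribeAsync, the conversion and recognition steps could then run inside the callback passed to TempFile.UseAsync, with the transcript as the return value.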

We then update Program.cs to pass the converter.

using Microsoft.Extensions.Configuration;

using VideoSpeechToText.Console;

IConfigurationRoot config = new ConfigurationBuilder()

.AddUserSecrets<Program>()

.Build();

var azureSpeechServiceConfig = config

.GetSection("AzureSpeechService")

.Get<AzureSpeechServiceConfig>();

IVideoToWavConverter videoToWavConverter = new VideoToWavConverter();

ISpeechTranscriber mySpeechRecognizer = new AzureSpeechTranscriber(

azureSpeechServiceConfig,

videoToWavConverter

);

string text = await mySpeechRecognizer.TranscribeAsync("C:\\Test", "news.mp4"); // 3m23s BBC news broadcast

Console.WriteLine(text);

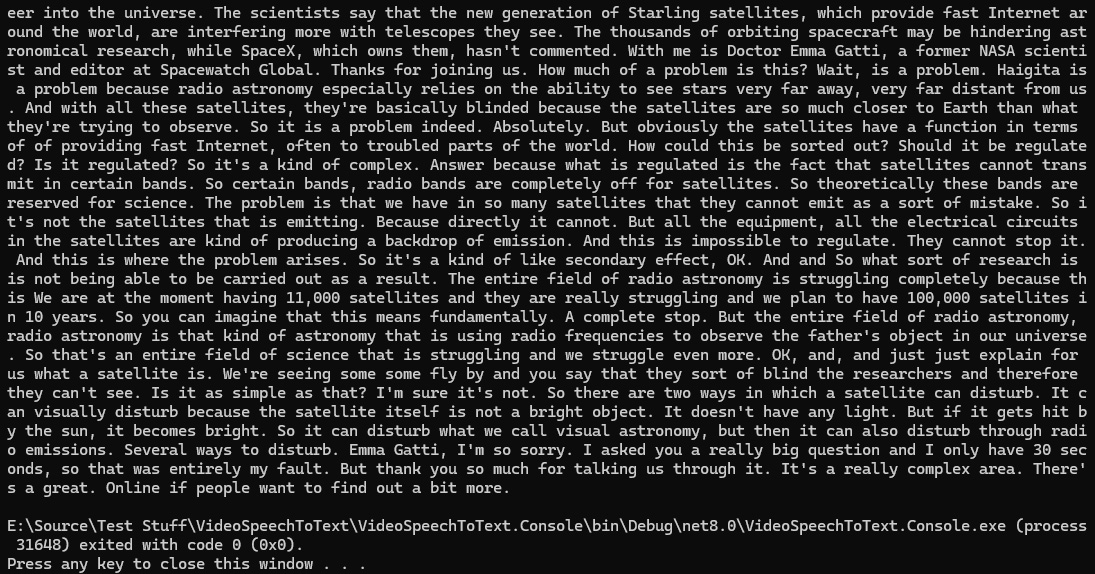

Running the program gives us the result:

Again, it's worked well. Pretty accurate although some of the punctuation could be better.

Again, it's worked well. Pretty accurate although some of the punctuation could be better.

Additional Configuration

There are a couple of features that can be configured through the SpeechConfig object.

Profanity Filtering

There is a built-in profanity filter that is enabled by default. Profane words are masked with asterisk (*) characters, but the filter can be configured to remove them instead or to return the raw transcription.

// choose one of the following
speechConfig.SetProfanity(ProfanityOption.Masked);  // default: mask with asterisks
speechConfig.SetProfanity(ProfanityOption.Removed); // remove profane words
speechConfig.SetProfanity(ProfanityOption.Raw);     // leave the text unfiltered

Dictation

With 'dictation' mode enabled, spoken punctuation is recognised explicitly. Saying 'Would you like some tea question mark' gives the result 'Would you like some tea?', and 'red slash white slash blue' becomes 'red/white/blue'.

speechConfig.EnableDictation();
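
To show where these calls might sit, here is a small sketch of a factory that builds the SpeechConfig in one place. SpeechConfigFactory is a hypothetical name, and the values chosen (British English, raw profanity, dictation on) are examples only, not recommendations.

```csharp
using Microsoft.CognitiveServices.Speech;

// Hypothetical factory; the option values chosen here are examples only.
public static class SpeechConfigFactory
{
    public static SpeechConfig Create(string key, string region)
    {
        SpeechConfig speechConfig = SpeechConfig.FromSubscription(key, region);

        // language of the audio being transcribed
        speechConfig.SpeechRecognitionLanguage = "en-GB";

        // return the transcription without masking or removing anything
        speechConfig.SetProfanity(ProfanityOption.Raw);

        // interpret spoken punctuation such as 'question mark'
        speechConfig.EnableDictation();

        return speechConfig;
    }
}
```

In our AzureSpeechTranscriber, the same calls could be made on _speechConfig in the constructor.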

Conclusion

The Azure Speech Service provides us with a great way to transcribe audio and video files. It works in real time, so we could also use it to transcribe live events. The accuracy is also very good, given that it has to work with multiple languages and their dialects.

I spent less than 3 hours working on a prototype. It actually took me more time to write this article than to develop a prototype.

Unfortunately, I didn't hear back from my application for the contract role. It was a great learning experience though and something that I can put into use in the future.

I think that Azure AI speech services are great and can't wait to learn more about them.

Source Code

The source code for this article can be found on GitHub.